The Dark Side of AI Content & How to Use It Safely

Aug 26, 2025 | 3 Min Read

AI-generated content offers powerful creative possibilities but also comes with serious risks. It can homogenize creativity, spread misinformation, amplify biases, and undermine authenticity. This article explores the dark side of AI-generated content and highlights safe-use principles such as transparency, human oversight, contextual deployment, and legal accountability to ensure AI serves as a supportive tool rather than a threat to truth and human expression.

Background

We stand at the edge of a creative revolution. Artificial intelligence has democratized content creation, allowing anyone to produce professional-quality images, videos, and text within minutes. ChatGPT crafts compelling prose, Midjourney generates stunning visuals, and deepfake technology produces realistic videos of people saying things they never said. While this technological marvel has the potential to amplify human creativity like never before, it conceals a more troubling reality.

Despite society’s admiration for AI’s creative capabilities, we have underestimated the systemic risks of widespread AI-generated content. These dangers go beyond mere misuse or deliberate deception; they threaten the very foundations of truth, creativity, and human expression. The reality is stark: AI-generated content, while offering undeniable benefits, poses significant risks to intellectual authenticity, epistemic integrity, and creative diversity, and it demands immediate and comprehensive attention.

The Illusion of Creativity

A common myth is that AI-generated content is truly creative. In reality, AI doesn’t create; it recombines existing human work. Every “original” image, story, or piece of music is a sophisticated remix, filtered through algorithms that mask its derivative nature.

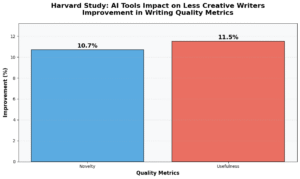

Research published in Science Advances highlights this paradox. In a study by Doshi and Hauser (2024), stories written by people who had access to five AI-generated ideas were rated 8.1% higher in novelty and 9.0% higher in usefulness than stories written without AI. Yet these AI-assisted stories were more similar to one another than the human-only stories. AI may boost individual creativity but reduce collective novelty.
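“More similar to one another” is a property of a whole collection of stories, not of any single one. One common way to quantify it is the mean pairwise cosine similarity of document vectors: the higher the average, the less diverse the collection. The sketch below is only an illustration under stated assumptions. It uses crude bag-of-words vectors rather than the measure used in the study, and the function names and sample stories are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a deliberately crude text representation."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two word-count vectors (0 = no shared words)."""
    dot = sum(count * b[word] for word, count in a.items())  # missing words count as 0
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def mean_pairwise_similarity(texts: list[str]) -> float:
    """Average similarity over every pair of texts; higher means more homogeneous."""
    vectors = [bag_of_words(t) for t in texts]
    pairs = list(combinations(vectors, 2))
    if not pairs:
        return 0.0
    return sum(cosine_similarity(a, b) for a, b in pairs) / len(pairs)


# Hypothetical premises: varied human ideas vs. near-duplicate AI-assisted ones.
human_stories = [
    "a lighthouse keeper befriends a storm",
    "twins trade places at a county fair",
    "the last librarian on mars reads aloud",
]
ai_assisted = [
    "a young girl discovers a hidden magical world",
    "a young boy discovers a hidden magical world",
    "a young girl discovers a secret magical garden",
]

print(round(mean_pairwise_similarity(human_stories), 2))
print(round(mean_pairwise_similarity(ai_assisted), 2))
```

With these sample premises, the AI-assisted set scores far higher, which is the shape of the “individual gain, collective loss” pattern: each premise can be perfectly serviceable while the collection as a whole converges.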

The impact extends beyond aesthetics. As AI-generated material floods creative markets, from stock photos to marketing copy, it creates a feedback loop of mediocrity. Genuine human expression becomes rarer because derivative AI work is cheaper and faster to produce, making original creativity seem less practical.

Worryingly, reliance on AI can weaken human creative skills. In the same study, the least creative writers showed the largest gains, which raises a critical question: are we creating a generation of creators who cannot function without algorithms? AI may appear to enhance creativity, but it risks becoming a crutch that narrows the overall creative landscape.

Misinformation at Scale

AI can now produce highly convincing yet false content. Security.org’s 2024 deepfake analysis reports a 1,000% increase in fraud incidents from 2022 to 2023, with losses up to 10% of companies’ annual profits. Just three seconds of audio can create an 85% voice match, and 70% of people cannot reliably tell real from cloned voices.

A 2018 MIT study of Twitter found that false news spreads about six times faster than the truth: the top 1% of false-news cascades reached between 1,000 and 100,000 people, while true stories rarely reached more than 1,000. Combined with AI’s ability to generate endless variations, this poses an unprecedented threat to truth.

High-profile cases illustrate the risk: a deepfake of a company’s CFO on a video call led to a $25 million fraudulent transfer, and AI-generated robocalls imitating President Biden urged thousands of New Hampshire voters to skip the 2024 primary. Both were produced cheaply and quickly.

The real danger lies in industrialized AI misinformation. Every AI-generated article, voice, or social post blurs the line between authentic and artificial, eroding public trust. Without robust safeguards, platforms and creators are not just adopting tools; they are undermining informed discourse and the very foundation of shared reality.

Ethical Blind Spots

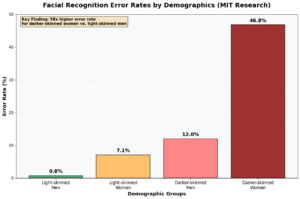

The bias embedded in AI-generated content is not a bug; it is a feature. AI systems reflect and amplify the biases present in their training data with algorithmic precision and at unprecedented scale. Research from the MIT Media Lab has documented systematic bias in commercial facial analysis systems: in the Gender Shades study, gender-classification error rates for darker-skinned women reached as high as 46.8%, while error rates for lighter-skinned men never exceeded 0.8%.

These disparities are not technical accidents but inevitable consequences of training AI systems on data that reflects historical inequalities and systemic discrimination. According to research published in Nature, when AI systems are trained on biased datasets, they don’t simply reproduce existing biases; they systematically amplify them.

The corporate response to these documented biases reveals the depth of the ethical crisis. Despite years of research documenting systematic discrimination in AI systems, the promised solutions (algorithmic bias mitigation, diverse training datasets, and fairness-aware machine learning) have largely failed to address the fundamental problems. A 2024 MIT study found that purported bias reduction techniques often simply shift discrimination rather than eliminate it, creating new forms of algorithmic unfairness while providing corporations with the veneer of ethical compliance.

This represents corporate theater, not genuine protection. Technology companies invest billions in “AI ethics” initiatives while simultaneously deploying systems that perpetuate and scale discriminatory practices. The rhetoric of bias mitigation serves primarily to deflect criticism while maintaining the profitable status quo of biased AI systems.

The ethical implications extend beyond individual discrimination to collective representation. AI-generated content systematically underrepresents marginalized voices, perspectives, and experiences because these communities are underrepresented in training data. When AI systems generate content about social issues, historical events, or cultural practices, they embed and amplify dominant cultural narratives while marginalizing alternative viewpoints. This is not neutral technology—it is ideological reproduction disguised as objective content generation.

Intellectual Property & Authenticity Crisis

AI-generated content has shattered traditional frameworks of intellectual property and creative attribution. The fundamental question is not whether AI companies have technically violated copyright law, but whether they have systematically undermined the entire concept of creative ownership.

Current AI training practices involve scraping and processing millions of copyrighted works without permission or compensation. According to Harvard Business Review’s analysis of generative AI intellectual property issues, this practice creates “infringement and rights of use issues, uncertainty about ownership of AI-generated works, and questions about unlicensed content.” The U.S. Copyright Office has received over 10,000 comments on AI copyright issues, indicating the scale of legal uncertainty.

The conventional framing of this issue, which focuses on legal technicalities and potential licensing schemes, misses the deeper crisis. The real theft is not only from artists but also from audiences, who are systematically deprived of authentic human expression. When AI-generated content floods creative markets, it creates an authenticity crisis in which genuine human creativity becomes indistinguishable from algorithmic recombination.

This represents more than economic disruption—it constitutes cultural appropriation at industrial scale. AI systems extract the creative labor of millions of human artists, writers, and creators, then repackage and redistribute that creativity without acknowledgment or compensation. The resulting content may be technically legal, but it represents a fundamental violation of creative integrity.

The collapse of attribution norms extends beyond individual creators to entire cultural traditions. AI systems trained on diverse cultural content can generate works that superficially resemble traditional art forms, music styles, or literary traditions without any understanding of their cultural significance or historical context. This creates a form of algorithmic colonialism where traditional knowledge and creative practices are extracted, processed, and redistributed by technology companies based primarily in Silicon Valley.

Safe Use Principles

Despite these risks, AI-generated content tools are not inherently evil; they are powerful technologies that demand responsible governance. Effective risk mitigation requires four core principles:

Transparency through mandatory labeling is the foundation of safe AI content practices. The European Union’s AI Act, which entered into force in August 2024, requires providers to clearly mark AI-generated content, particularly when it could be mistaken for human-created work, with these transparency obligations applying from August 2026. California’s AI Transparency Act, taking effect in January 2026, goes further by requiring AI companies to provide free detection tools for identifying AI-generated content.
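In practice, labeling usually means two things: a notice that human readers can see, and metadata that software can check. The sketch below shows that idea under loud assumptions. The `DisclosureLabel` fields and the notice wording are hypothetical and are not a format prescribed by the AI Act or the California law. A real deployment would more likely follow an established provenance standard such as C2PA Content Credentials.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class DisclosureLabel:
    # Hypothetical fields for illustration only; not a legal or industry schema.
    ai_generated: bool
    generator: str        # free-text name of the tool that produced the content
    human_reviewed: bool
    labeled_at: str       # ISO 8601 timestamp in UTC


def label_content(body: str, generator: str, human_reviewed: bool = False) -> dict:
    """Attach a visible notice and a machine-readable label to AI-generated text."""
    label = DisclosureLabel(
        ai_generated=True,
        generator=generator,
        human_reviewed=human_reviewed,
        labeled_at=datetime.now(timezone.utc).isoformat(),
    )
    visible_notice = "[This content was generated with AI assistance.]"
    return {
        "body": f"{visible_notice}\n\n{body}",  # what readers see
        "disclosure": asdict(label),            # what tools and platforms can check
    }


# "example-llm" is a placeholder tool name.
record = label_content("Five tips for spring gardening...", generator="example-llm")
print(json.dumps(record, indent=2))
```

Keeping the visible notice and the structured label together means a platform can filter or flag AI content automatically, while readers still get the disclosure even where the metadata is stripped.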

Human oversight and editorial review must remain mandatory for any AI-generated content intended for public consumption. This means requiring human editors to review, verify, and approve AI-generated content before publication. The goal is not to eliminate AI assistance, but to ensure human accountability and judgment remain central to content creation processes.
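A review requirement is easiest to enforce when the publishing step itself refuses unapproved AI drafts, instead of relying on people remembering to check. Here is a minimal sketch of such a gate. It assumes a simple in-memory workflow, and `Draft`, `approve`, `publish`, and `ReviewRequiredError` are hypothetical names, not part of any real CMS.

```python
from dataclasses import dataclass, field
from typing import Optional


class ReviewRequiredError(Exception):
    """Raised when AI-generated content is published without human approval."""


@dataclass
class Draft:
    title: str
    body: str
    ai_generated: bool
    approved_by: Optional[str] = None            # editor who signed off, if any
    notes: list[str] = field(default_factory=list)


def approve(draft: Draft, editor: str, note: str = "") -> None:
    """Record a named human editor's sign-off after review and verification."""
    if not editor.strip():
        raise ValueError("an approving editor must be named")
    draft.approved_by = editor
    if note:
        draft.notes.append(note)


def publish(draft: Draft, published: list[Draft]) -> None:
    """Publish a draft, refusing AI-generated drafts that lack human approval."""
    if draft.ai_generated and draft.approved_by is None:
        raise ReviewRequiredError(f"'{draft.title}' needs human review before publication")
    published.append(draft)


published: list[Draft] = []
draft = Draft("Market update", "AI-drafted summary of quarterly results.", ai_generated=True)

try:
    publish(draft, published)
    blocked_message = ""
except ReviewRequiredError as err:
    blocked_message = str(err)  # refused: no editor has signed off yet

approve(draft, "J. Editor", note="Checked figures against the source filings")
publish(draft, published)  # succeeds now that a named human has approved it
```

Recording *who* approved a draft, not just *that* it was approved, also supports the accountability principle: every published AI-assisted piece traces back to a responsible person.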

Contextual deployment involves using AI as a tool for assistance rather than replacement. Research shows that AI tools provide the greatest benefit to less creative writers while offering minimal improvement to highly creative individuals. This suggests AI should be positioned as a learning aid and creative enhancement tool rather than a substitute for human creativity.

Legal accountability requires holding human creators and publishers legally responsible for AI-generated content they deploy. This includes liability for biased, discriminatory, or harmful content produced by AI systems, regardless of the specific technical mechanisms involved. Clear legal responsibility creates incentives for careful, ethical AI deployment.

Conclusion

AI-generated content offers real benefits (democratized creation, higher productivity, and new forms of expression) but also poses serious risks. It can homogenize creativity, spread misinformation, amplify biases, and undermine intellectual authenticity. These are not hypothetical concerns; they are observable realities.

AI is not inherently creative or neutral; it amplifies existing power structures and inequalities. Responsible use requires transparency, human oversight, contextual application, and legal accountability.

The future of human creativity depends less on AI advances and more on our ability to preserve authentic expression. The key question is not whether AI can be more creative, but whether we can safeguard space for genuine human thought in an increasingly algorithm-driven world. The stakes are high: preserving truth, originality, and the irreplaceable value of human creativity.


References

- Doshi, A. R., & Hauser, O. P. (2024). Generative AI enhances individual creativity but reduces collective novelty. Science Advances, 10(24).

- Koivisto, M., & Grassini, S. (2023). Best humans still outperform artificial intelligence in a creative divergent thinking task. Scientific Reports, 13, 13673.

- Buolamwini, J., & Gebru, T. (2018). Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification. Proceedings of the 1st Conference on Fairness, Accountability and Transparency, PMLR 81, 77–91.

- Appel, G., Neelbauer, J., & Schweidel, D. A. (2023). Generative AI Has an Intellectual Property Problem. Harvard Business Review.

- U.S. Copyright Office. (2024). Copyright and Artificial Intelligence.

- European Union. (2024). AI Act | Shaping Europe’s digital future.

- Mayer Brown. (2024). New California Law Will Require AI Transparency and Disclosure Measures.

- Bellaiche, L., et al. (2023). Humans versus AI: whether and why we prefer human-created compared to AI-created artwork. Cognitive Research, 8, 42.

- Crawford, K., & Schultz, J. (2023). Generative AI Is a Crisis for Copyright Law. Issues in Science and Technology.